OpenAI Codex

Privatemode can serve as a model provider for Codex, OpenAI's agentic AI coding tool. To set it up, follow the steps below.

Setting up the Privatemode proxy

Follow the quickstart guide to run the Privatemode proxy. It's usually advisable to enable prompt caching with the --sharedPromptCache flag.
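Before configuring Codex, you can check that the proxy is up and serving the model by sending it a single chat request. The sketch below is a minimal example using only the Python standard library. It rests on three assumptions:

- The proxy listens on localhost:8080 and exposes the OpenAI-compatible /v1/chat/completions route, as the base_url in the Codex profile suggests.
- The proxy itself holds your API key, so the request carries none. The Codex profile also configures no key.
- build_chat_request and smoke_test are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the proxy listens on localhost:8080 (matching base_url in the
# Codex profile) and offers an OpenAI-compatible /v1/chat/completions route.
BASE_URL = "http://localhost:8080/v1"
MODEL = "gpt-oss-120b"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a minimal OpenAI-style chat completion request.

    No Authorization header is set: this sketch assumes the proxy
    already holds the API key.
    """
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def smoke_test(base_url: str = BASE_URL, model: str = MODEL, timeout: float = 60.0) -> str:
    """Send one prompt through the proxy and return the model's reply text."""
    req = build_chat_request(base_url, model, "Reply with the single word: pong")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    # Standard OpenAI chat completion response shape.
    return payload["choices"][0]["message"]["content"]
```

With the proxy running, call print(smoke_test()). A connection error points at the proxy setup. An HTTP error response usually points at the model name or the request format.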

Configuring Codex

After installing Codex, you can find its configuration file at ~/.codex/config.toml. Add the following to the file to define a profile for Privatemode:

[model_providers.privatemode]
name = "Privatemode"
base_url = "http://localhost:8080/v1"

[profiles.privatemode]
model_provider = "privatemode"
model = "gpt-oss-120b"

See the official Codex docs for details on available configuration options.

Starting Codex with Privatemode

Once you've added the profile for Privatemode to the configuration file, you can start Codex as follows:

codex --profile privatemode

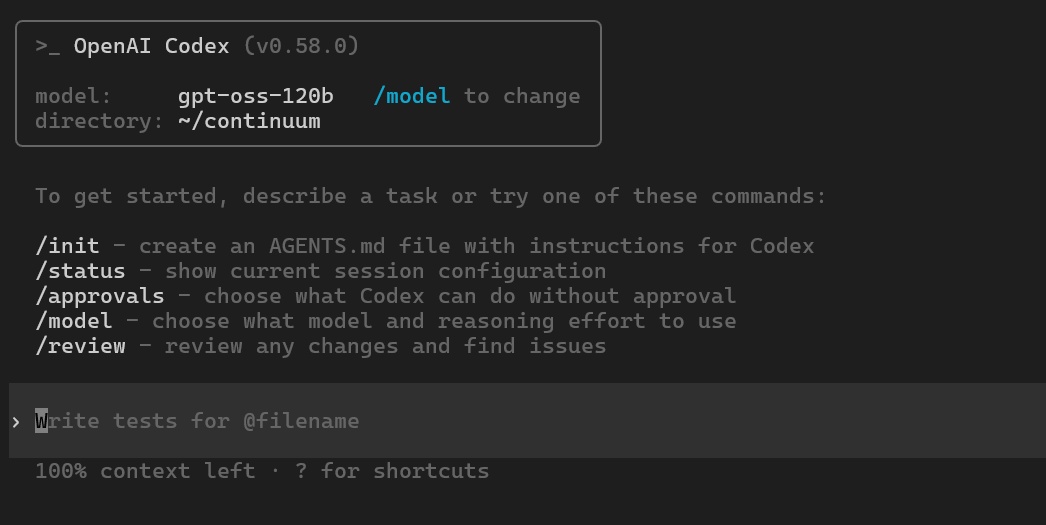

You will be greeted with a screen like the following. You are now ready to "vibe code" confidentially 🥳