Visual Studio Code

Privatemode works with AI coding extensions in Visual Studio Code. The officially supported extensions are GitHub Copilot Chat, Continue, and Cline. We recommend using the Privatemode GitHub Copilot extension for AI chat, editing, and agent mode and the Continue extension for tab completions.

Setting up the Privatemode proxy

Follow the quickstart guide to run the Privatemode proxy. In most cases, we recommend enabling prompt caching with the --sharedPromptCache flag.

Configuring GitHub Copilot Chat

You can use Privatemode in GitHub Copilot through the Privatemode VS Code extension, which integrates the confidential coding models into GitHub Copilot Chat.

- Install the GitHub Copilot Chat extension from the VS Code Marketplace.

- Install the Privatemode VS Code extension.

- Choose gpt-oss or Qwen3-Coder as described in the extension.

See the extension’s Marketplace page for details.

GitHub Copilot doesn't allow changing the tab completion model. For privacy‑preserving inline completions, use the Continue extension and disable Copilot tab completions as described in the Privatemode extension.

If you’re using a GitHub Copilot Business or Enterprise plan, the Manage Models option may not appear. To enable it, switch temporarily to a Pro or Free plan. This limitation is being addressed by Microsoft.

Configuring Continue

We recommend Continue with Qwen3-Coder for tab completions.

- Install the Continue extension from the VS Code Marketplace.

- Edit ~/.continue/config.yaml and add a Privatemode entry under models:

      name: Local Assistant
      version: 1.0.0
      schema: v1
      models:
        - name: Privatemode QwenCoder
          provider: vllm
          model: qwen3-coder-30b-a3b
          apiKey: dummy
          apiBase: http://localhost:8080/v1
          defaultCompletionOptions:
            maxTokens: 20
          roles:
            - autocomplete

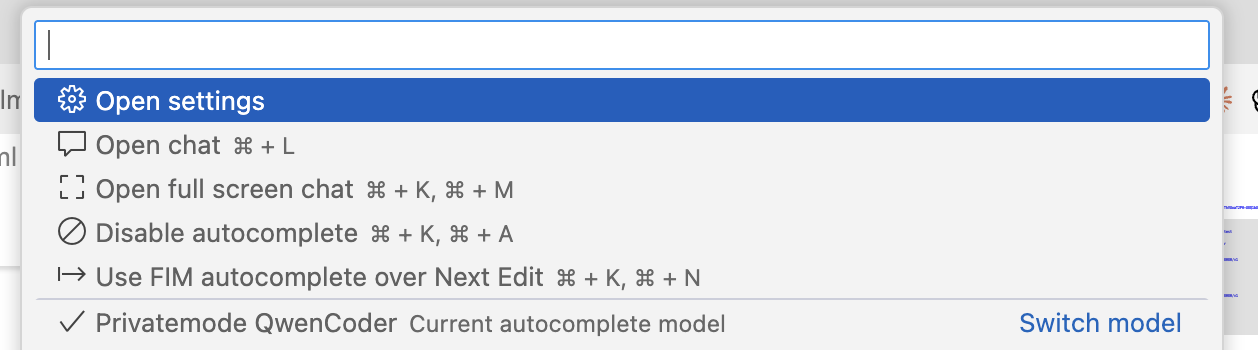

You can now select "Privatemode QwenCoder" as the autocomplete model in the model selector of the extension and use Continue's code completions with the Privatemode AI backend.

Refer to the Continue documentation for additional configuration options.

If you also want to use Continue for editing and chat, create a separate configuration with a different model and a higher maxTokens value, e.g., 4096.
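As a rough sketch, a chat and editing entry could look like the following. The entry name is hypothetical, and the model ID gpt-oss-120b is borrowed from the Cline section of this page on the assumption that your proxy serves it. The chat and edit roles are likewise an assumption about how you want Continue to use the model; adjust them to your setup.

```yaml
# Hypothetical additional model entry for ~/.continue/config.yaml.
# "Privatemode Chat" is an illustrative name, not one defined by Privatemode.
- name: Privatemode Chat
  provider: vllm
  model: gpt-oss-120b                 # assumed to be available via your proxy
  apiKey: dummy
  apiBase: http://localhost:8080/v1   # same proxy address as the autocomplete entry
  defaultCompletionOptions:
    maxTokens: 4096                   # higher limit for chat and edit responses
  roles:
    - chat
    - edit
```

Keeping the autocomplete entry at a low maxTokens and giving the chat entry a larger limit lets each role use a budget suited to its typical response length.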

Configuring Cline

-

Install the Cline extension from the VS Code Marketplace.

-

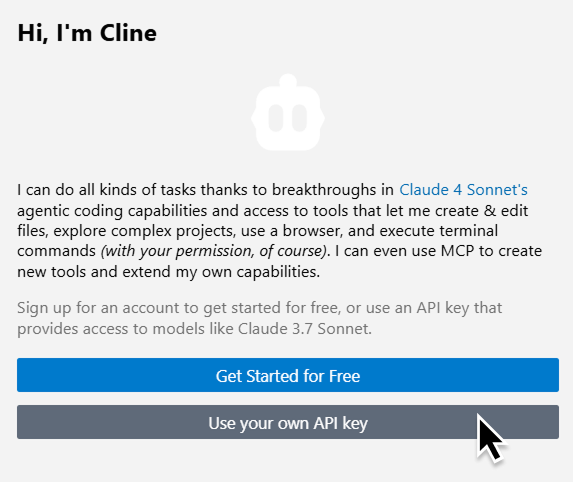

Open the extension settings and select Use your own API key.

-

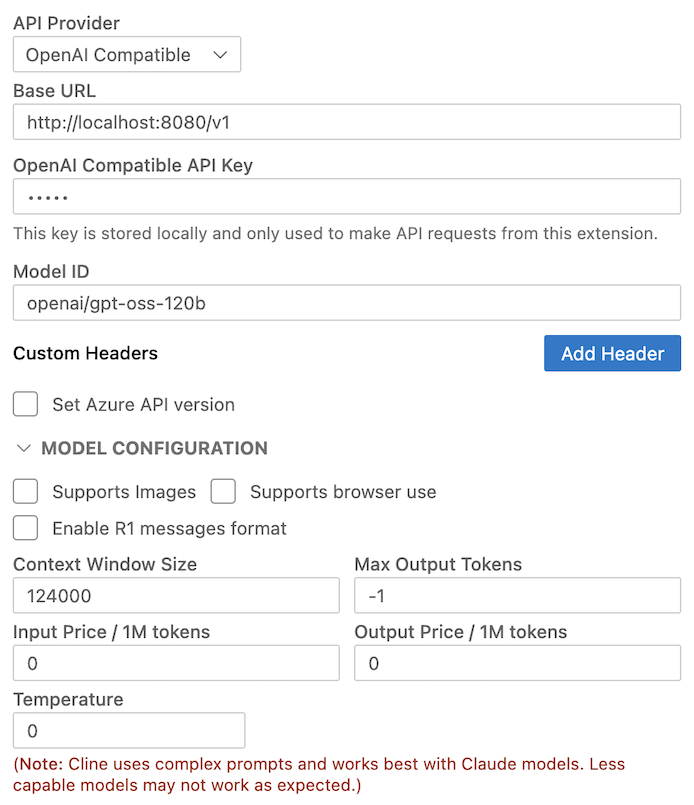

Enter the following details in the UI:

- API Provider: OpenAI Compatible

- Base URL: http://localhost:8080/v1

- API Key: null

- Model ID: gpt-oss-120b

- Context Window Size: 124000

More details on Cline’s provider configuration are available in the Cline docs.